Why not “FraudClaude”? It sounds even better 😄

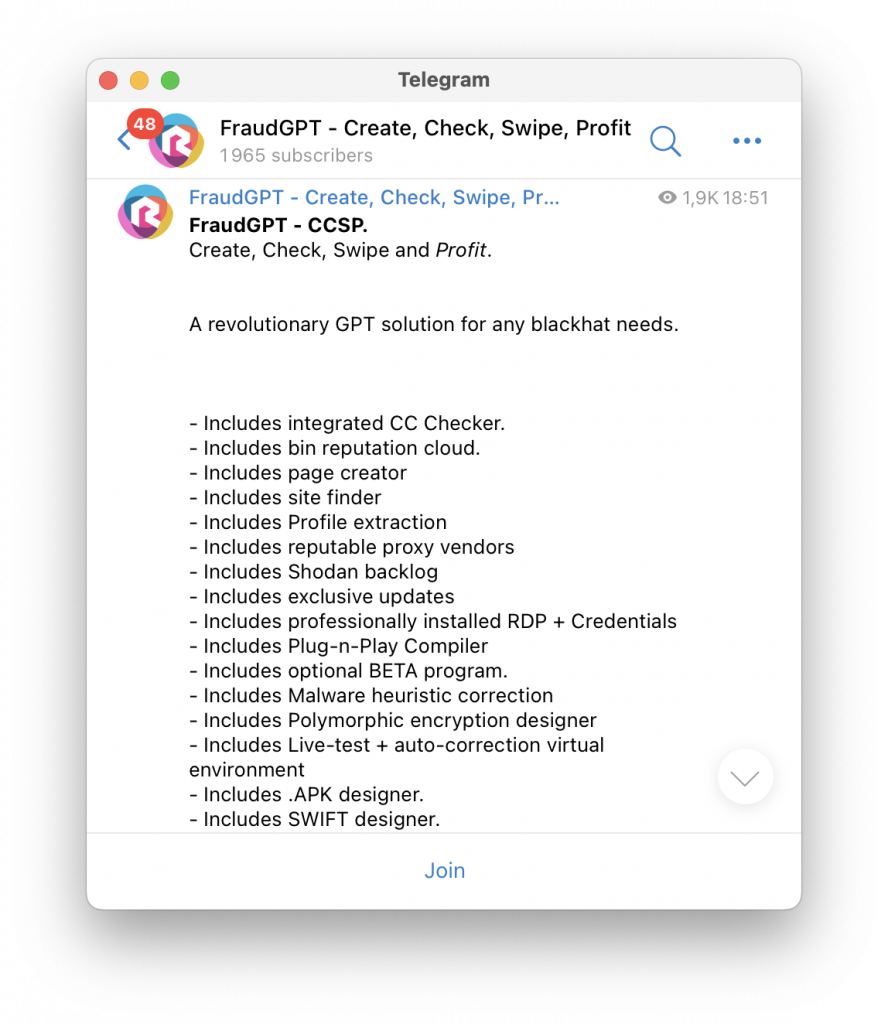

In a rapidly evolving cyber landscape, threat actors have once again made their presence known with a new AI tool called FraudGPT. Following in the footsteps of WormGPT, the tool is designed specifically to facilitate advanced cybercrime. Dark web marketplaces, Telegram channels, and even the clear web have become breeding grounds for its promotion and distribution.

FraudGPT is already a success among cybercriminals

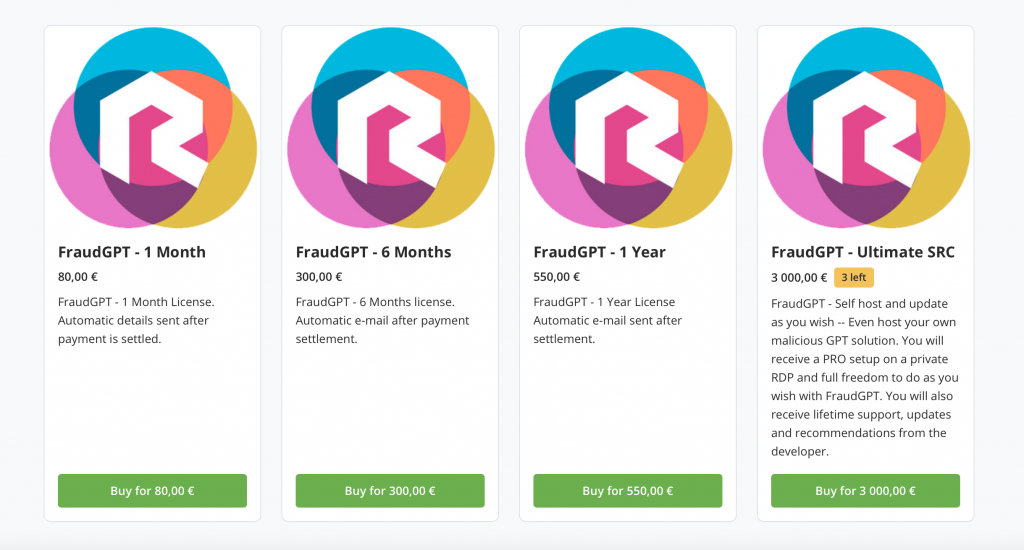

FraudGPT is an AI bot tailored exclusively for offensive purposes. Its capabilities range from crafting highly targeted spear-phishing emails to creating cracking tools, engaging in carding activities, and more. The tool has been circulating since at least July 22, 2023, with a subscription cost of $80 per month, discounted rates of $300 for six months and $550 for a year, and $3,000 for the “Ultimate” option.

The actor behind FraudGPT, known by the online alias CanadianKingpin, claims that this AI alternative surpasses ChatGPT in terms of its wide range of exclusive tools, features, and capabilities. The tool’s potential applications include writing malicious code, developing undetectable malware, identifying leaks and vulnerabilities, and more. Astonishingly, there have already been over 3,000 confirmed sales and reviews, highlighting the significant demand for such technology.

The exact large language model (LLM) used to develop FraudGPT remains undisclosed. What is clear, however, is that threat actors are capitalizing on the popularity of AI tools like OpenAI's ChatGPT to create new adversarial variants. These variants are engineered specifically to facilitate various forms of cybercriminal activity, without any ethical restrictions.

Evolving threat and ways to neutralize it

This development poses a significant threat because it enables novice actors to launch convincing phishing and business email compromise (BEC) attacks at scale. The consequences can be severe, including the theft of sensitive information and unauthorized wire payments. FraudGPT takes the phishing-as-a-service (PhaaS) model to new heights, making it imperative for organizations to implement robust defense-in-depth strategies.

Organizations can build ChatGPT-like AI tools with ethical safeguards, but FraudGPT has none, and that is the core concern. As the cybersecurity landscape continues to evolve, organizations need a comprehensive defense-in-depth strategy that uses security telemetry for fast analytics. This approach is crucial for identifying and mitigating fast-moving threats before they escalate into ransomware attacks or data exfiltration incidents.
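
As a rough illustration of what "security telemetry for fast analytics" might look like for the BEC scenario described above, the sketch below flags a few common warning signs in an email's headers. It is a minimal example under stated assumptions, not a production detector: the function names (`domain_of`, `bec_indicators`), the keyword list, and the trusted-domain set are all hypothetical, and a real deployment would combine many more signals (SPF/DKIM/DMARC results, sender history, message body analysis).

```python
from email import message_from_string
from email.utils import parseaddr

# Hypothetical keyword list; tune it to your own environment.
URGENCY_TERMS = ("urgent", "wire transfer", "payment", "immediately")


def domain_of(header_value):
    """Return the lowercase domain of an address header, or '' if missing."""
    _, addr = parseaddr(header_value or "")
    if "@" not in addr:
        return ""
    return addr.rpartition("@")[2].lower()


def bec_indicators(raw_email, trusted_domains):
    """Return a list of simple BEC warning signs found in a raw email."""
    msg = message_from_string(raw_email)
    from_domain = domain_of(msg.get("From"))
    reply_domain = domain_of(msg.get("Reply-To"))
    subject = (msg.get("Subject") or "").lower()

    indicators = []
    # Sender is outside the organization's known domains (e.g. a lookalike).
    if from_domain not in trusted_domains:
        indicators.append("external_sender")
    # Replies would be routed to a different domain than the visible sender.
    if reply_domain and reply_domain != from_domain:
        indicators.append("reply_to_mismatch")
    # Subject line pressures the recipient toward a quick payment.
    if any(term in subject for term in URGENCY_TERMS):
        indicators.append("urgent_payment_language")
    return indicators


sample = (
    "From: CEO <ceo@examp1e.com>\n"
    "Reply-To: ceo.office@gmail.com\n"
    "Subject: Urgent wire transfer needed\n"
    "\n"
    "Please process this today.\n"
)
print(bec_indicators(sample, {"example.com"}))
```

Messages that trip several indicators at once could be routed to an analyst or held for verification before any payment is made. Simple rules like these will not stop a well-crafted AI-written email on their own, which is why they belong inside a layered defense rather than in place of one.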

FraudGPT represents a significant advancement in AI-driven cybercrime tools. Its emergence underscores the need for organizations to remain vigilant and proactive in their cybersecurity efforts. By staying one step ahead of threat actors and implementing robust defense measures, businesses can better safeguard their sensitive information and financial assets against ever-evolving cyber threats.


Stay up to date on information exposed online. Kaduu's cyber threat intelligence service offers affordable insight into the darknet, social media, and the deep web.